ICONIC PROJECTS

Our ICONIC projects

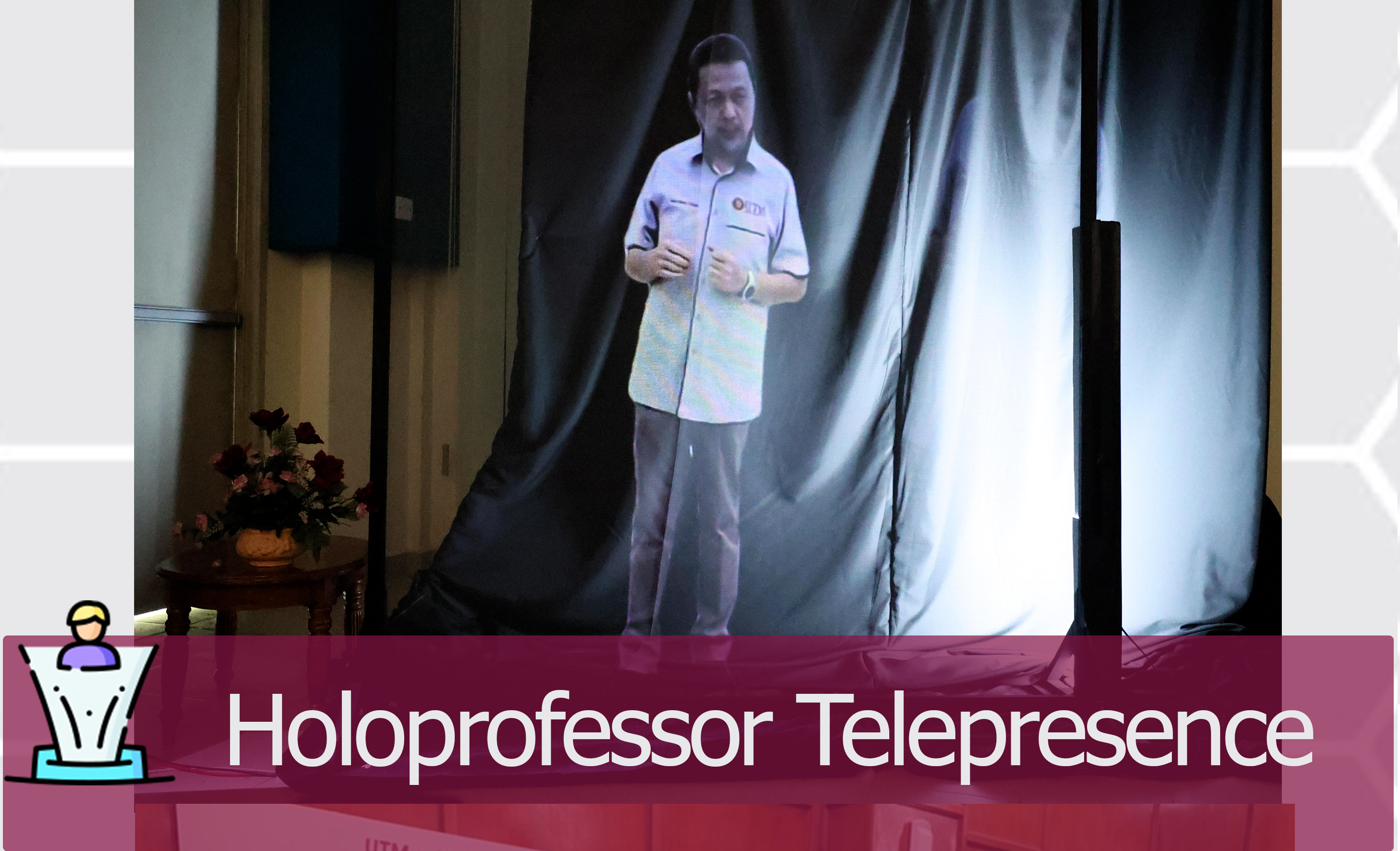

Holo-Professor

Holo-Professor is UTM’s first home-grown 3D holographic telepresence technology. It is not a pre-recorded video: the professor appears and speaks live, in real time.

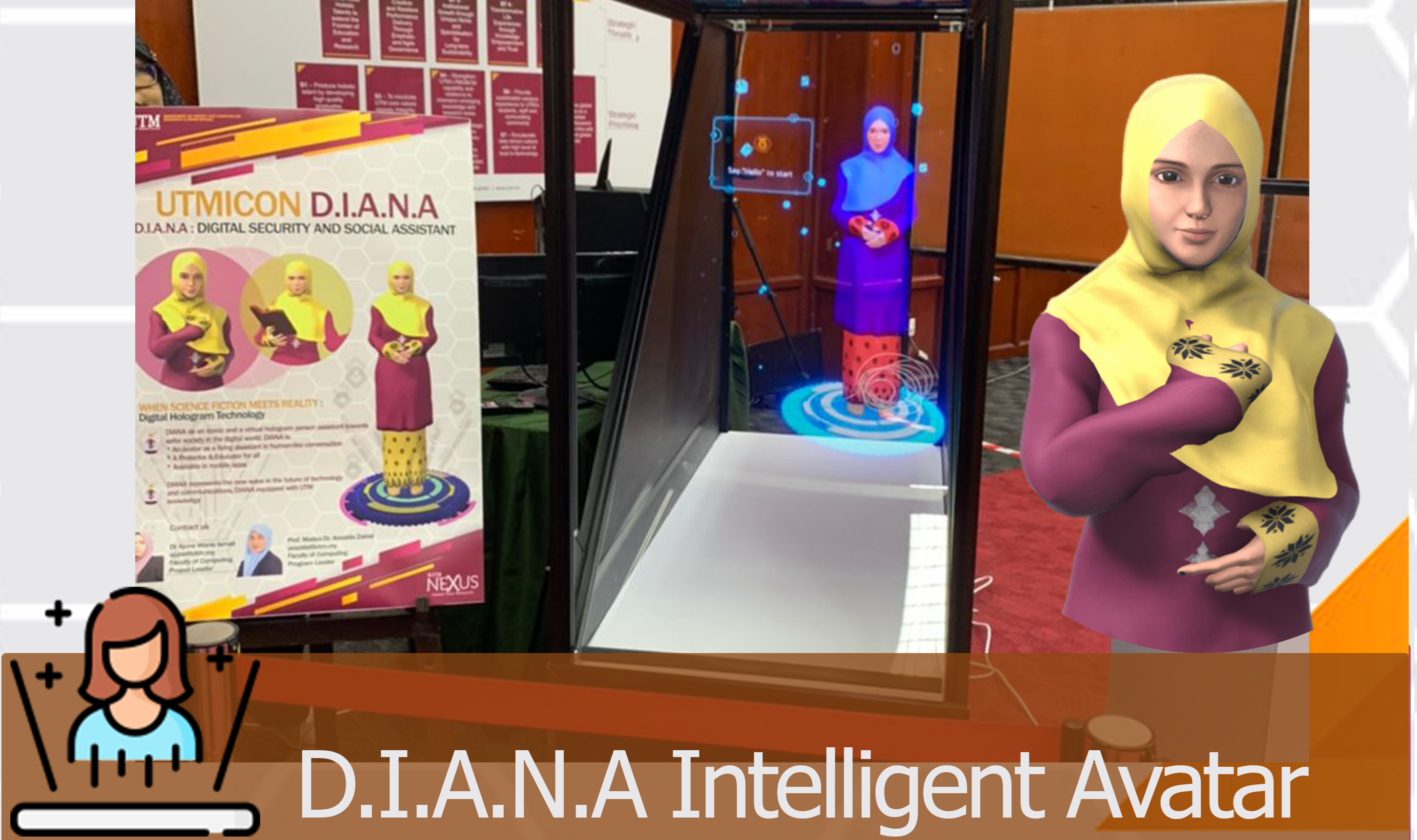

UTM DIANA HOLOGRAM AVATAR

D.I.A.N.A stands for Digital Security and Social Intelligent Assistant. She is a virtual, human-like female avatar with a local Malaysian appearance. She can promote our unique Malaysian culture and has the potential to become our digital ambassador. Media coverage: Berita Harian.

XR Menu for Restaurant

Augmented menus reduce the need to speak to a waiter in person, because plenty of information that would not fit on a physical menu can easily be included in an AR experience. Integrating this into a mobile ordering app reduces customer-to-waiter contact even further.

AUGMENTED / MIXED REALITY PROJECTS

Augmented Reality user interfaces using real fingertips and hands, multimodal interaction using gesture and speech input, AR games using HMDs, and more.

ARGarden

ARGarden is a handheld AR application for 3D outdoor landscape design. The project implements multi-user interaction so that participants can collaboratively design an outdoor garden in a shared handheld AR environment.

AR and VR Remote Collaboration Mobile Game SDK

In this work, we develop a mobile game SDK for remote collaboration between AR and VR interfaces. Remote collaboration between users on different interfaces leverages the strengths of both AR and VR.

AR Battleship Board Game

This project brings the existing Battleship board game to life with AR technology. We develop an AR Battleship board game to enhance the user experience. The AR environment is projected on a holographic display, and gameplay is driven by multi-user interaction.

MR Deco - Interior Design MR Application

In this work, we develop a Mixed Reality (MR) application for interior design. Users should be able to interact intuitively with the virtual content in real time. The project identifies how to integrate AR and VR into a fused MR environment.

Finger-Ray Interaction in Handheld AR

This project implements real hand gestures as an interaction tool in a handheld AR interface. The interaction supports selecting a 3D object from a distance and selecting an object that is occluded by other objects.
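Selecting distant and occluded objects with a ray can be illustrated with a small sketch. This is not the project's implementation: it assumes the fingertip defines a ray and that scene objects are approximated by bounding spheres, and the names `Sphere` and `ray_hits` are hypothetical. Returning every hit, nearest first, is one simple way to let a user step past the front object to an occluded one.

```python
import math
from dataclasses import dataclass


@dataclass
class Sphere:
    """Hypothetical bounding sphere standing in for a selectable 3D object."""
    name: str
    center: tuple
    radius: float


def ray_hits(origin, direction, spheres):
    """Return (distance, name) for every sphere the ray passes through, nearest first."""
    # Normalise the ray direction so distances are in scene units.
    length = math.sqrt(sum(c * c for c in direction))
    d = tuple(c / length for c in direction)

    hits = []
    for s in spheres:
        # Solve |origin + t*d - center|^2 = radius^2 for t.
        oc = tuple(o - c for o, c in zip(origin, s.center))
        b = sum(x * y for x, y in zip(oc, d))
        c = sum(x * x for x in oc) - s.radius ** 2
        disc = b * b - c
        if disc < 0:
            continue  # the ray misses this sphere
        root = math.sqrt(disc)
        t = -b - root
        if t < 0:
            t = -b + root  # ray starts inside the sphere
        if t >= 0:
            hits.append((t, s.name))
    return sorted(hits)


# Assumed scene: "far" sits directly behind "near", "off" is to the side.
scene = [
    Sphere("near", (0.0, 0.0, 2.0), 0.5),
    Sphere("far", (0.0, 0.0, 5.0), 0.5),
    Sphere("off", (3.0, 0.0, 4.0), 0.5),
]
hits = ray_hits((0.0, 0.0, 0.0), (0.0, 0.0, 1.0), scene)
names = [name for _, name in hits]
```

Here `names[0]` is the front object and `names[1]` is the occluded one behind it, so a gesture such as a second pinch could advance the selection along the ray.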

3D Telepresence Remote Collaboration in XR

This work describes human teleportation in an XR environment using advanced RGB-D sensor devices. It explains the phases of developing real human teleportation in XR through the proposed collaborative XR system.

Bio-WTiP

In this work, we develop a handheld AR application for Biology lessons using tangible interaction. The project implements AR feature-based tracking to display the AR environment. Tangible interaction is invoked by placing markers so that they interact with one another.

Mathematics Game for Handheld AR

In this work, we develop a Mathematics game using handheld Augmented Reality (HAR) for primary school children. It offers an alternative way to learn Mathematics as schools move to digital learning to contain the spread of Coronavirus Disease 2019 (COVID-19).

Flexible Human Height Estimation in mobile AR

A human height measurement approach using AR. World tracking and Visual Inertial Odometry are used for human height estimation. The algorithm uses Golden Ratio rules to estimate a person's height from the lower part of the knee and displays the result in AR.
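The arithmetic behind a Golden Ratio estimate can be sketched as follows. The project's exact ratios are not stated here, so this is only one possible reading: it assumes the knee divides the floor-to-navel distance in the golden ratio and the navel divides total height in the golden ratio. The function name and the 0.40 m input are hypothetical.

```python
PHI = (1 + 5 ** 0.5) / 2  # golden ratio, about 1.618


def estimate_height_from_knee(knee_height_m):
    """Estimate total height from the floor-to-knee distance in metres.

    Assumed proportions (not taken from the project):
      navel-to-knee / knee-to-floor = PHI
      total height / navel height   = PHI
    """
    if knee_height_m <= 0:
        raise ValueError("knee height must be positive")
    navel_height = knee_height_m * (1 + PHI)  # knee-to-floor plus navel-to-knee
    return navel_height * PHI


# Example: a knee height of 0.40 m, as might be measured by AR world tracking.
estimated = estimate_height_from_knee(0.40)
```

Under these assumptions the estimate is the knee height multiplied by PHI cubed, roughly 4.236, so 0.40 m gives about 1.69 m.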

VoxAR Modelling Editor

VoxAR is a 3D modelling editor for Augmented Reality that uses real hand gestures. It combines a Leap Motion device with AR to model 3D objects and save them in .fbx format.

Holographic Projection in Mixed Reality

We use a hologram display to project the Mixed Reality interior design. The project targets a Mixed Reality environment, with Z-hologram used for the holographic projection.

Mixed Reality with Real Hand Gesture

In this work, we describe an advantage of multimodal AR interfaces that use gesture and speech input: multimodal fusion allows real-time 3D interaction to be carried out intuitively.

AR Coloring Book

Brings existing physical copies of books to life using AR. A texturing process applies the captured texture from a 2D colored drawing to both the visible and occluded regions of a 3D character in real time.

AR Toy

We use a 3D object or a real object as input to a handheld AR interface. Combined with speech recognition, this is a potential interaction method that lets users speak to interact with virtual objects in the AR scene.

Multimodal AR Interaction

In this work, we describe an advantage of multimodal AR interfaces that use gesture and speech input: multimodal fusion allows real-time 3D interaction to be carried out intuitively.

Interactive AR Popup Book

A 3D hand interface gives users seamless interaction in AR environments, using real hands as the interaction tool. No physical markers or devices are needed for interaction.

AR Interior Design

Gaze interaction with context sensing lets users view information over time. Users can create their own information for places or content and interact with the physical world in ways not experienced before.

Fingertips AR Interaction

Mobile Augmented Reality user interfaces using real fingertips instead of the touch screen. Contribution: allows users to interact with virtual 3D models in the work area using interaction metaphors in a mobile environment.

AR Jenga Game using HMD

A vision capability enables immersion and interactivity in mobile augmented reality, improving the gaming experience by letting game physics apply to real-world surfaces and objects in AR environments. Developer: Solomon

AR Autonomous Agents

Character animation using Augmented Reality techniques for autonomous agents. Embodied autonomous agents: the use of animated characters in augmented reality applications.

VIRTUAL REALITY PROJECTS

Fully immersive environments through Virtual Reality user interfaces using Oculus Touch devices, Leap Motion, and Kinect depth sensors to produce natural interaction and make interaction more effective.

Oculus Touch Experience

Oculus with Leap Motion

VR Manufacturing

VR Ancient Malacca

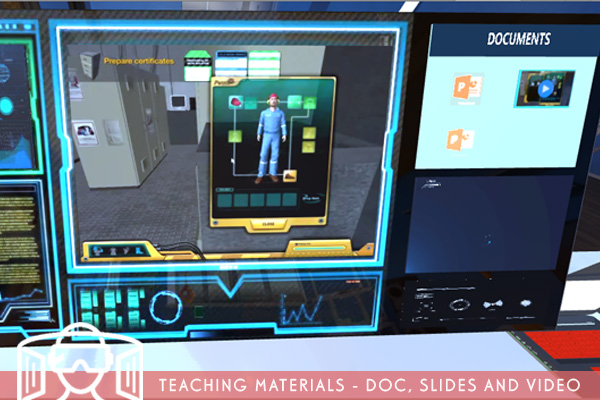

Virtual MOOC Teaching

Virtual Classroom

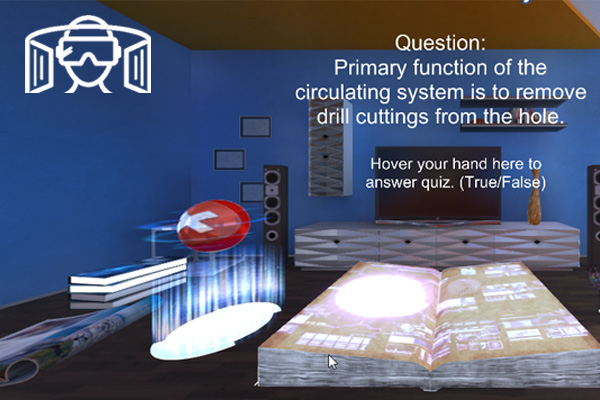

VR Assessment

Virtual Whiteboard

CLASSROOM OF THE FUTURE: The exciting future of education with virtual reality

In response to the challenge of the 4th Industrial Revolution and in line with MoHE’s intention of Redesigning Higher Education, we created a VR classroom in which students can experience an immersive learning environment. Oil and Gas was chosen as the case study, and Human Anatomy was also explored during the development of the classroom of the future. Through cross-discipline collaboration between the Faculty of Chemical and Energy Engineering and the Faculty of Computing, the project delivers content that lets UTM students make a virtual on-site visit to an offshore platform during their studies. Visiting an actual offshore platform is practically impossible for students because of safety concerns and cost. The project therefore gives students a new learning experience.

Project members: Dr. Ajune Wanis (VR/AR), Assoc. Dr. Mohd Shahrizal (VR), Dr. Masitah (HCI), Dr. Mazura (Chemical Engineering), Dr. Zaki Yamani (Chemical Engineering), Dr. Wan Rosli (Petroleum Engineering)

Technical team: Dr. Ajune (VR/AR), Yahya Fekri (Senior Engineer VR), Asyraf (Gesture Interaction), Azhar (Content Developer and Animation), Shukri (UI/UX Effects and Sounds), and Affendy (Interface/Integration)