Abstract: Comparative evaluations of information retrieval (IR) systems using test collections rest on several key premises: that representative topic sets can be created, that suitable relevance judgments can be generated, and that systems can be sensibly compared based on their retrieval effectiveness over the selected topics. This talk will present several contributions to this experimental methodology, addressing practical problems faced by IR researchers who use test collections to evaluate information retrieval systems. The talk will also briefly cover some of the speaker's recent work on crowdsourcing, tweets, question answering (QA) systems and the Internet of Things (IoT) in relation to IR.

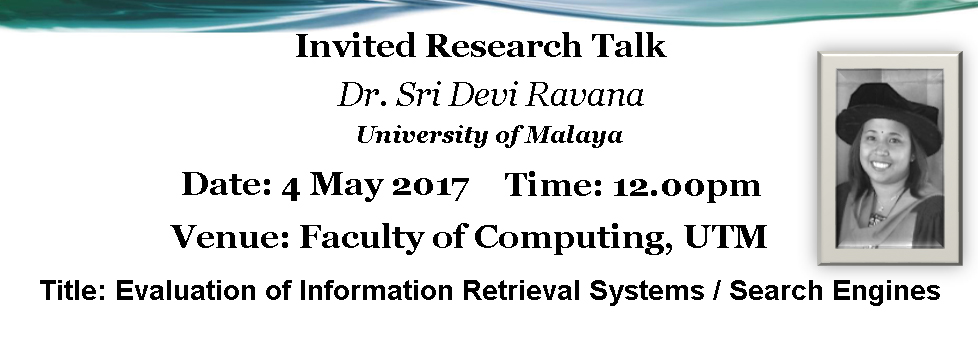

Biography: Sri Devi Ravana received her Bachelor of Information Technology from Universiti Kebangsaan Malaysia in 2000. She then received a Master of Software Engineering from the University of Malaya, Malaysia, in 2001 and a PhD from The University of Melbourne, Australia, in 2012. Her research interests include IR evaluation, social search and QA systems. She has received awards at international conferences and has published extensively in ISI-indexed journals. She is currently a Senior Lecturer in the Department of Information Systems, University of Malaya, Malaysia.